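Problem

When Kubernetes is used for development and testing with RBD snapshots, slow or incorrect cleanup can leave the cluster in the following state:

- There are only a few VolumeSnapshotContent objects

- But Ceph holds a large number of snapshots

Eventually this can make Ceph unavailable, for example because it runs out of disk space.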

Background

Ceph RBD snapshots

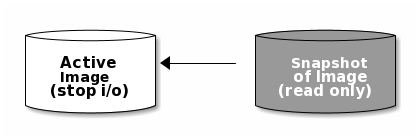

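By definition, a Ceph RBD snapshot is a read-only copy of an image at a point in time. The official Ceph documentation recommends stopping the application's I/O before taking a snapshot, or running fsfreeze first, so that the resulting snapshot is consistent and usable.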
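The freeze, snapshot, thaw sequence described above can be sketched as a small shell helper. This is only an illustration: the function names, the mount point, and the image spec are hypothetical, and the DRY_RUN switch is an addition that prints the commands instead of running them.

```shell
# run: execute a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# consistent_snap MOUNTPOINT POOL/IMAGE@SNAP (hypothetical helper)
# Freezes the filesystem, takes the RBD snapshot, then thaws the filesystem.
consistent_snap() {
  rc=0
  run fsfreeze --freeze "$1" || return 1
  # Record a failed snapshot but still thaw the filesystem afterwards.
  run rbd snap create "$2" || rc=$?
  run fsfreeze --unfreeze "$1" || rc=$?
  return "$rc"
}
```

With DRY_RUN=1 exported, calling consistent_snap /mnt/data replicapool/myimage@before-upgrade only prints the three commands, which is a safe way to review them before running them on the host where the image is mounted.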

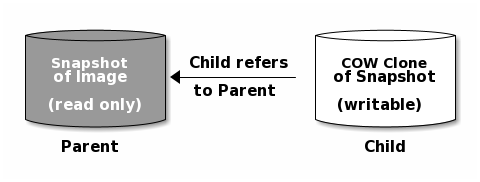

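Thanks to the layering design of Ceph images, a writable clone can be created quickly on top of a snapshot.

A clone of a snapshot behaves like an ordinary RBD image: it can be read and written, cloned again, and so on.

VolumeSnapshotContent and RBD snapshots

Creating a VolumeSnapshot of a PV through CSI produces a VolumeSnapshotContent, for example: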

[root@sandrider~ ]# kubectl get volumesnapshotcontents.snapshot.storage.k8s.io snapcontent-062c610c-0c73-4963-9909-a5ff5c480234 -o yaml

apiVersion: snapshot.storage.k8s.io/v1

kind: VolumeSnapshotContent

...

...

status:

creationTime: 1656475215498012908

readyToUse: true

restoreSize: 5368709120

snapshotHandle: 0001-0009-rook-ceph-0000000000000001-fb1a79b8-f75f-11ec-925f-bafec6769230

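The snapshotHandle here points to an RBD image in Ceph: the UUID at the end of the handle also appears in the image name. From the Rook Ceph toolbox, look up that snapshot image: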

sh-4.4$ rbd ls -l replicapool | grep snap | grep fb1a79b8-f75f-11ec-925f-bafec6769230

csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230
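The mapping from a snapshotHandle to an image name can be sketched as a shell helper. It assumes, based only on the output shown here, that the handle ends with a 36-character UUID and that the image name is that UUID with a csi-snap- prefix; the function name is hypothetical and the prefix may differ in other setups.

```shell
# snap_image_from_handle HANDLE (hypothetical helper)
# Assumption: the last 36 characters of the handle are the UUID and the
# RBD image is named csi-snap-<uuid>, as in the example output.
snap_image_from_handle() {
  uuid=$(printf '%s' "$1" | tail -c 36)
  printf 'csi-snap-%s\n' "$uuid"
}

# Possible usage with kubectl (replace <name> with a real object name):
#   handle=$(kubectl get volumesnapshotcontent <name> \
#              -o jsonpath='{.status.snapshotHandle}')
#   snap_image_from_handle "$handle"
```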

Then use rbd info to inspect this snapshot:

sh-4.4$ rbd info replicapool/csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230

rbd image 'csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 1

id: 1f461bdf3d46fc

block_name_prefix: rbd_data.1f461bdf3d46fc

format: 2

features: layering, deep-flatten, operations

op_features: clone-child

flags:

create_timestamp: Wed Jun 29 04:00:15 2022

access_timestamp: Wed Jun 29 04:01:54 2022

modify_timestamp: Wed Jun 29 04:00:15 2022

parent: replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@075d721c-9e5d-496c-814f-3c65f364a34e

overlap: 5 GiB

Note that each VolumeSnapshot actually results in both an RBD snapshot and an RBD image, as rbd ls -l shows:

sh-4.4$ rbd ls -l replicapool | grep snap | grep fb1a79b8-f75f-11ec-925f-bafec6769230

csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230 5 GiB replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@075d721c-9e5d-496c-814f-3c65f364a34e 2

csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230@csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230 5 GiB replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@075d721c-9e5d-496c-814f-3c65f364a34e 2

There are two entries: the first, csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230, is the RBD image, and the second, csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230@csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230, is the RBD snapshot.
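The distinction between the two kinds of entries can be made mechanical with a short filter. This is a sketch with a hypothetical function name: it reads rbd ls -l output on standard input and treats any name containing @ as a snapshot and everything else as an image.

```shell
# classify_rbd_entries (hypothetical helper)
# Reads `rbd ls -l POOL` output on stdin and labels each row.
classify_rbd_entries() {
  awk '
    $1 == "NAME" { next }                     # skip the header row
    $1 ~ /@/     { print "snapshot " $1; next }
    NF > 0       { print "image " $1 }
  '
}

# Possible usage inside the toolbox:
#   rbd ls -l replicapool | grep snap | classify_rbd_entries
```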

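Inspect each with rbd info. The output below was captured for a different snapshot, csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230, starting with the image: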

sh-4.4$ rbd info replicapool/csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230

rbd image 'csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 1

id: 1e97c38c9c5d0d

block_name_prefix: rbd_data.1e97c38c9c5d0d

format: 2

features: layering, deep-flatten, operations

op_features: clone-child

flags:

create_timestamp: Thu Jun 30 02:15:45 2022

access_timestamp: Thu Jun 30 02:18:40 2022

modify_timestamp: Thu Jun 30 02:15:45 2022

parent: replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@f6958495-d367-450b-8e8c-164607ee0816

overlap: 5 GiB

Next is the RBD snapshot csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230@csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230:

sh-4.4$ rbd info replicapool/csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230@csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230

rbd image 'csi-snap-8ce9ced7-f81a-11ec-925f-bafec6769230':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 1

id: 1e97c38c9c5d0d

block_name_prefix: rbd_data.1e97c38c9c5d0d

format: 2

features: layering, deep-flatten, operations

op_features: clone-child

flags:

create_timestamp: Thu Jun 30 02:15:45 2022

access_timestamp: Thu Jun 30 02:18:40 2022

modify_timestamp: Thu Jun 30 02:15:45 2022

protected: False

parent: replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@f6958495-d367-450b-8e8c-164607ee0816

overlap: 5 GiB

Apart from the extra protected: False line in the snapshot's output, the two are identical. Both are clone children that point to the same parent image:

sh-4.4$ rbd info replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c

rbd image 'csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 4

id: 16fa679a999612

block_name_prefix: rbd_data.16fa679a999612

format: 2

features: layering, operations

op_features: clone-parent, snap-trash

flags:

create_timestamp: Sun Jun 26 09:54:50 2022

access_timestamp: Thu Jun 30 16:02:12 2022

modify_timestamp: Sun Jun 26 09:54:50 2022

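From this we can infer that when a VolumeSnapshot is created, Ceph RBD actually creates a read-only snapshot and also a clone of a snapshot, and that the clone behaves like an ordinary image. The parent field shows that the clone is based on a snapshot of the source volume csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c, while the image@snapshot name shows that the read-only snapshot is taken on the clone itself.

Deleting a single RBD snapshot

- Suppose a user deletes a VolumeSnapshotContent by mistake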

[root@~ ]# kubectl get volumesnapshotcontents.snapshot.storage.k8s.io snapcontent-062c610c-0c73-4963-9909-a5ff5c480234 -o yaml

apiVersion: snapshot.storage.k8s.io/v1

kind: VolumeSnapshotContent

...

uid: fb5744f8-c747-4f94-8e6a-8c33cebfe6c1

spec:

deletionPolicy: Retain

...

snapshotHandle: 0001-0009-rook-ceph-0000000000000001-fb1a79b8-f75f-11ec-925f-bafec6769230

Delete the VolumeSnapshotContent while its deletionPolicy is Retain:

[root@~ ]# kubectl delete volumesnapshotcontents.snapshot.storage.k8s.io snapcontent-062c610c-0c73-4963-9909-a5ff5c480234

volumesnapshotcontent.snapshot.storage.k8s.io "snapcontent-062c610c-0c73-4963-9909-a5ff5c480234" deleted

- Check the state of the RBD snapshot. Because the deletion policy is Retain, the snapshot is still there:

sh-4.4$ rbd ls -l replicapool | grep snap | grep fb1a79b8-f75f-11ec-925f-bafec6769230

csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230 5 GiB replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@075d721c-9e5d-496c-814f-3c65f364a34e 2

csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230@csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230 5 GiB replicapool/csi-vol-054bf61a-f536-11ec-97e2-727ae8d37c4c@075d721c-9e5d-496c-814f-3c65f364a34e 2

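There are two entries: the first, csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230, is the clone image, and the second, csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230@csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230, is the RBD snapshot.

- Delete the RBD snapshot. The RBD snapshot and the RBD image must be deleted separately, the snapshot first and then the image: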

sh-4.4$ rbd snap rm replicapool/csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230@csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230

Removing snap: 100% complete...done.

sh-4.4$ rbd rm replicapool/csi-snap-fb1a79b8-f75f-11ec-925f-bafec6769230

Removing image: 100% complete...done.

Afterwards, confirm with rbd ls that both are gone.

Deleting multiple RBD snapshots

- Enter the Ceph toolbox pod

kubectl exec -it -n rook-ceph rook-ceph-tools-xxx-xxx -- sh

- Check the existing snapshots

rbd ls replicapool | grep snap | wc -l

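The command above gives the number of snapshots. If there are more than a few dozen but only a handful of VolumeSnapshotContent objects, it is time to consider a cleanup.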
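Beyond comparing counts, the leftover images can be listed by name. The sketch below is an illustration under assumptions: the function and file names are hypothetical, and it relies on the naming seen earlier, where the image is csi-snap- followed by the last 36 characters of the snapshotHandle.

```shell
# find_orphan_snap_images HANDLES_FILE IMAGES_FILE (hypothetical helper)
# HANDLES_FILE: one snapshotHandle per line.
# IMAGES_FILE:  one RBD image name per line (output of `rbd ls POOL`).
# Prints csi-snap-* images that no handle refers to.
find_orphan_snap_images() {
  expected=$(mktemp) || return 1
  actual=$(mktemp) || return 1
  # Assumption: image name = "csi-snap-" + last 36 characters of the handle.
  awk 'length($0) >= 36 { print "csi-snap-" substr($0, length($0) - 35) }' "$1" \
    | sort -u > "$expected"
  grep '^csi-snap-' "$2" | sort -u > "$actual"
  # comm -13 keeps only the lines that appear in the second file alone.
  comm -13 "$expected" "$actual"
  rm -f "$expected" "$actual"
}

# Possible way to produce the two input files:
#   kubectl get volumesnapshotcontent \
#     -o jsonpath='{range .items[*]}{.status.snapshotHandle}{"\n"}{end}' > handles.txt
#   rbd ls replicapool > images.txt   # run inside the toolbox
```

Working from two plain text files keeps the comparison independent of where kubectl and rbd are available, since they usually run in different places.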

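- Cleanup

If there are only a few VolumeSnapshotContent objects, it is best to delete all VolumeSnapshot and VolumeSnapshotContent objects first and then clean up the Ceph snapshots. Otherwise, the RBD snapshots that must be kept have to be excluded from the cleanup.

The main cleanup commands are:

- Remove the snapshots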

rbd ls -l replicapool | grep snap | awk 'BEGIN {s2="replicapool";OFS="/"} {print s2,$1}' | grep @ | xargs -n 1 rbd snap rm

- Remove the snapshot clones

rbd ls -l replicapool | grep snap | awk 'BEGIN {s2="replicapool";OFS="/"} {print s2,$1}' | grep -v @ | xargs -n 1 rbd rm
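When some snapshots must be kept, a more cautious variant is to generate the deletion commands for an explicit list of images and review them before running anything. This is a sketch: the function name is hypothetical, and it assumes the snapshot carries the same name as its image, as in the listings above.

```shell
# gen_cleanup_commands POOL (hypothetical helper)
# Reads csi-snap image names on stdin and prints, for each one, the
# snapshot removal followed by the image removal. Nothing is executed.
gen_cleanup_commands() {
  while IFS= read -r image; do
    [ -n "$image" ] || continue
    # Assumption: the snapshot is named after its image (image@image).
    printf 'rbd snap rm %s/%s@%s\n' "$1" "$image" "$image"
    printf 'rbd rm %s/%s\n' "$1" "$image"
  done
}

# Possible usage: review the generated file, then run it inside the toolbox.
#   gen_cleanup_commands replicapool < orphans.txt > cleanup.sh
```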

References

rbd snapshot

rbd examples